A custom robots.txt file is a simple text file in which the website owner writes instructions that tell web crawlers which parts of the site they may or may not crawl. These instructions use a simple syntax that web crawlers are designed to read.

Ankit Singla, on his blog Master Blogging, describes the Blogger robots.txt file as a text file that contains a few lines of simple code. It is saved on the website or blog's server and instructs web crawlers on how to crawl and index your blog for the search results.

You can check your blog's robots.txt file at this link:

The Benefits of Adding a Robots.txt File in Blogger:

There are many advantages to adding a robots.txt file to your Blogger blog. Some of them are:

- It helps search engines find your site more easily

- It helps your articles appear in search results for relevant keywords

Enabling Robots.txt File in Blogger:

This process is easier than you might imagine. All you need to do is follow the simple steps below:

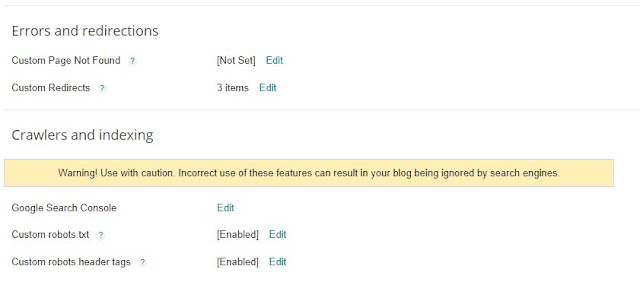

Go To Blogger >> Settings >> Search Preferences

Look for the Custom robots.txt section at the bottom and click Edit.

Tick "Yes", and a box will appear where you can write the contents of the robots.txt file. Enter this:

    User-agent: Mediapartners-Google
    User-agent: *
    Disallow: /search?q=*
    Disallow: /*?updated-max=*
    Allow: /
    Sitemap: http://www.yourdomain.com/feeds/posts/default?orderby=updated

Note: The first line, "User-agent: Mediapartners-Google", is for Google AdSense. If you use Google AdSense on your blog, keep it as it is; otherwise, remove it.

Click "Save Changes".
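Once the changes are saved, one way to confirm them is to open the file in a browser or fetch it with a short script. The sketch below assumes the usual convention that robots.txt is served from the root of the site; the helper names `robots_txt_url` and `fetch_robots_txt` are made up for this example, and `www.yourdomain.com` is the same placeholder used in the rules above.

```python
from urllib.parse import urljoin
from urllib.request import urlopen


def robots_txt_url(blog_url):
    """Return the robots.txt address for the site that blog_url belongs to.

    Assumes the file lives at the root of the site (/robots.txt).
    """
    return urljoin(blog_url, "/robots.txt")


def fetch_robots_txt(blog_url, timeout=10):
    """Download the live robots.txt and return it as text (needs network access)."""
    with urlopen(robots_txt_url(blog_url), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


# Any page or feed address on the blog resolves to the same robots.txt location.
print(robots_txt_url("http://www.yourdomain.com/feeds/posts/default?orderby=updated"))
```

Calling `fetch_robots_txt` with your own blog address should return the same lines you typed into the Custom robots.txt box, though it may take a little while for a change to show up.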

User-agent: Mediapartners-Google: This first line is for blogs with Google AdSense enabled; if you are not using Google AdSense, remove it. It addresses the AdSense crawler so that it can crawl all pages where AdSense ads are placed.

User-agent: *: Here, User-agent names the robot the rules apply to, and * stands for all search engine robots, such as those of Google, Bing, etc.

Disallow: /search?q=*: This line tells search engine crawlers not to crawl the search result pages.

Disallow: /*?updated-max=*: This line tells search engine crawlers not to crawl the label or navigation pages whose URLs contain ?updated-max=.

Allow: /: This line allows crawlers to crawl the homepage of your blog, along with every other page that is not disallowed above.

Sitemap: This last line tells search engine crawlers where to find the feed that lists every new or updated post.
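To see how the Disallow and Allow lines explained above interact, here is a minimal Python sketch that checks a few sample paths against them. It rests on some assumptions: `*` is treated as "any run of characters", a trailing `$` anchors the end of the URL, and when several rules match, the longest pattern wins (with Allow winning a tie). This is the commonly documented behavior of the major crawlers, but an individual crawler may differ. The function names and the sample paths are invented for illustration.

```python
import re

# Rules for the "User-agent: *" group of the robots.txt shown above.
RULES = [
    ("disallow", "/search?q=*"),
    ("disallow", "/*?updated-max=*"),
    ("allow", "/"),
]


def pattern_to_regex(pattern):
    """Turn a robots.txt path pattern into a compiled regular expression."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape everything literally, then let each "*" match any characters.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(rules, path):
    """Return True if path may be crawled under the given rules.

    Assumption: the longest matching pattern wins; Allow wins a tie.
    """
    best = None  # (pattern length, is_allow) of the strongest match so far
    for kind, pattern in rules:
        if pattern and pattern_to_regex(pattern).match(path):
            candidate = (len(pattern), kind == "allow")
            if best is None or candidate > best:
                best = candidate
    # A path that matches no rule at all is allowed by default.
    return True if best is None else best[1]


# Hypothetical sample paths, written the way Blogger URLs usually look.
for path in [
    "/",
    "/2020/01/my-post.html",
    "/search?q=seo",
    "/search/label/SEO?updated-max=2020-01-01",
]:
    print(path, "->", "allowed" if is_allowed(RULES, path) else "blocked")
```

Because `Allow: /` is the shortest pattern, it only decides the outcome for paths that neither Disallow line matches.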

You can also add your own rules to allow or disallow more pages. For example, to allow a page, use this rule:

Allow: /p/contact.html

To Disallow:

Disallow: /p/contact.html
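If you end up maintaining several extra rules, it can help to generate the text instead of typing it by hand. The following is only a sketch: `build_robots_txt` and its parameters are hypothetical names, and the default lines simply repeat the rules from this post. Paste the printed result into the Custom robots.txt box.

```python
def build_robots_txt(sitemap_url, adsense=True, extra_allow=(), extra_disallow=()):
    """Assemble the robots.txt text from this post plus any extra page rules."""
    lines = []
    if adsense:
        # Only needed when the blog shows Google AdSense ads.
        lines.append("User-agent: Mediapartners-Google")
    lines.append("User-agent: *")
    lines.append("Disallow: /search?q=*")
    lines.append("Disallow: /*?updated-max=*")
    # Extra page rules are placed before the catch-all "Allow: /".
    for path in extra_disallow:
        lines.append(f"Disallow: {path}")
    for path in extra_allow:
        lines.append(f"Allow: {path}")
    lines.append("Allow: /")
    lines.append(f"Sitemap: {sitemap_url}")
    return "\n".join(lines) + "\n"


# Example: block the contact page on a blog that uses AdSense.
print(build_robots_txt(
    "http://www.yourdomain.com/feeds/posts/default?orderby=updated",
    extra_disallow=["/p/contact.html"],
))
```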

I hope you have learned how to add the robots.txt file to your Blogger blog! If you face any difficulty, please let me know in the comment box below. Now it's your turn to say thanks in the comments and keep sharing this post. Till then, Peace, Blessings, and Happy Adding!
